Projects within the Web3 AI sector could remain niche unless they adapt to global principles espoused by national governments and transnational corporations.

As artificial intelligence (AI) evolves rapidly, two distinct ecosystems are charting their own courses: traditional tech giants and governments on one hand, and Web3-native AI projects on the other. Although both share a vision of an AI-augmented future, their values and guiding principles are increasingly at odds, raising serious concerns about the longevity of Web3-based AI applications.

Bitcoin is currently the only crypto or Web3-based global solution to break into the mainstream without compromising its core principles.

Stablecoins and decentralized finance (DeFi), the Web3 technologies now appearing on mainstream financial dashboards in developed and developing economies alike, have made tremendous compromises to achieve their success.

Web3 AI solutions will need to make similar compromises to succeed.

Centralized AI: Safety, Equity, and Governance

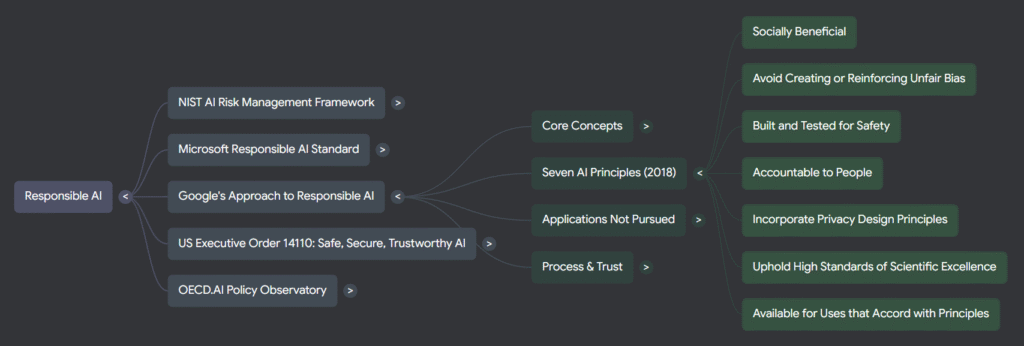

Governments and major technology firms like Microsoft are aggressively formalizing frameworks for “responsible AI.”

Microsoft, for example, has published internal standards aimed at preventing AI from reinforcing societal inequities. Likewise, the OECD’s AI policy dashboard prioritizes human rights, fairness, and safety.

These initiatives suggest that compliance, transparency, and harm mitigation are seen as essential pillars of long-term AI deployment, pillars that tech firms seeking mass adoption of their solutions should prioritize. AI systems must not only deliver results but also align with regulatory expectations and ethical norms.

Web3 AI: Incentives, Openness, and Decentralization

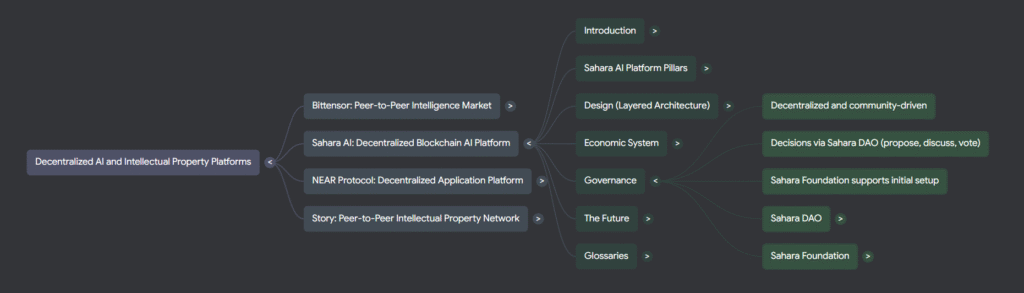

In contrast, Web3 AI projects like BitTensor, Sahara AI, Near, and Story Protocol focus more on decentralization, open participation, and tokenized incentives.

Equity, as defined by traditional institutions, is rarely mentioned in these projects’ documentation. Instead, they lean on permissionless access and market-driven models to foster what they view as a more “neutral” form of inclusion.

BitTensor, for instance, does not explicitly account for social inequalities or user safety. Its emphasis is on rewarding anyone who contributes compute or intelligence, without any centralized oversight.

Similarly, Near champions decentralized infrastructure and permissionless access, focusing on the technical and economic layers rather than social governance.

Story Protocol adds another layer of experimentation, with a universal IP repository and the inclusion of AI agents as validators, suggesting a future where content and governance could be shaped by autonomous systems.

A Fractured Future or Converging Paths?

The tension between these two frameworks (centralized, responsible AI versus decentralized, incentivized AI) presents a challenge for interoperability and scalability.

If Web3 projects want to be more than experimental sandboxes, they will likely need to align with evolving global standards. Regulatory pressure and public trust demands are already reshaping how AI systems are built and deployed across borders.

The hypothesis emerging from current comparisons is clear: mass adoption of Web3 AI may require a shift toward shared norms around safety, accountability, and compliance. Otherwise, these projects risk racing to the periphery of innovation and ending up incompatible with the systems most people will use.

This study will explore in much greater detail the guidelines and frameworks that global players are proposing for AI technology, and the human-level principles that actually drive Web3 AI projects in practice. The goal is to show how globally adopted Web3 solutions other than Bitcoin, namely stablecoins and DeFi, have had to compromise meaningfully with incumbents to succeed, and why Web3-based AI solutions will be no different.
