As AI reshapes everything from search engines to smart contracts, the concept of Responsible AI has emerged as critical ground for its future. Government agencies, tech giants, and international standards bodies are building frameworks designed to ensure AI is safe, transparent, fair, and trusted.

However, Web3 AI project teams often seem to believe that they operate outside these spheres. That raises a fundamental question: if Web3 AI wants to reach the mainstream, will it have to compromise with mainstream Responsible AI standards?

The answer seems to be yes.

Here, we will explore the status quo of Responsible AI, which big tech and the US government promote as the standard for how AI should be built and deployed across society. This is the benchmark for decentralized Web3. Web3 teams may span jurisdictions and may not be pinned down by any single one, but over time they will be increasingly influenced by the Responsible AI standards of their potential big tech clients and national governments.

The follow-up to this article will explore the Web3 side, with its inherent advantages and its bottlenecks to mainstream adoption.

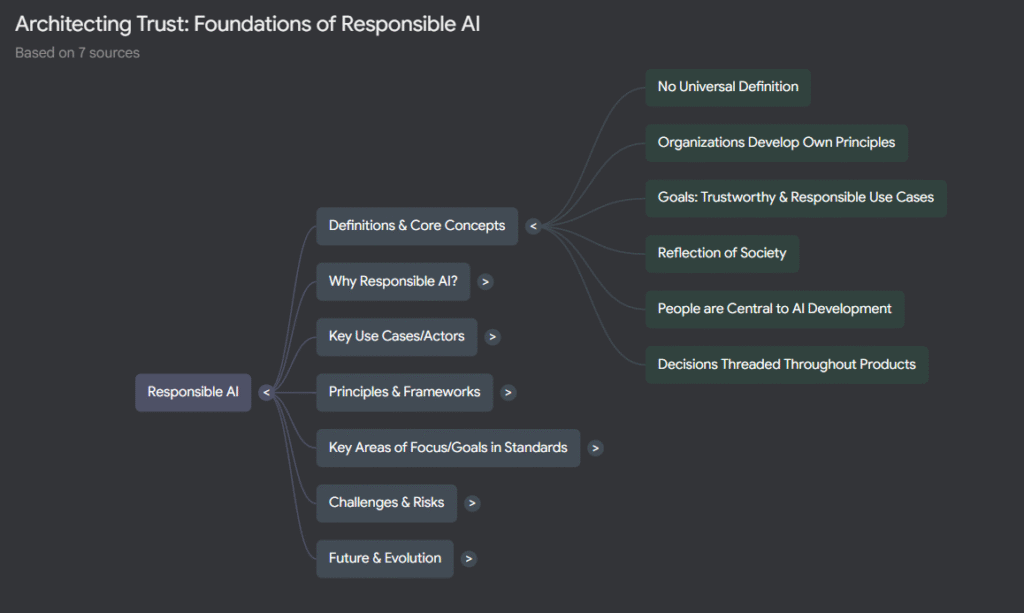

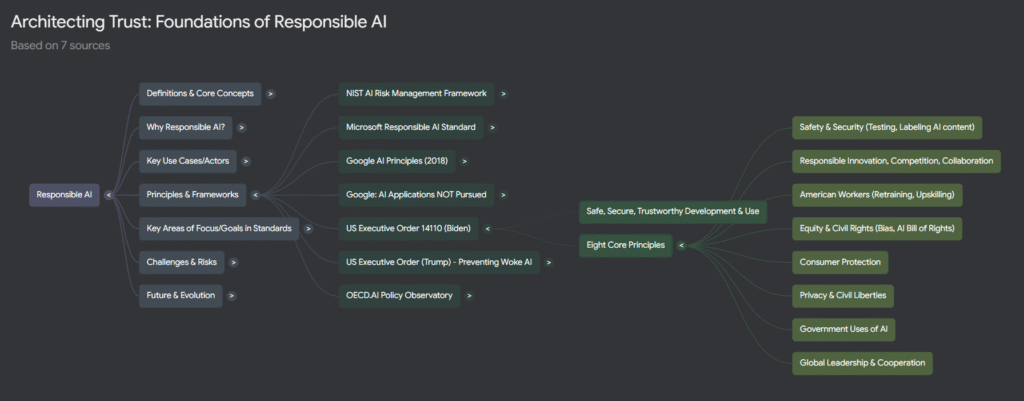

Foundations of Responsible AI: Universally Shared Principles

Across institutional frameworks from Google, Microsoft, NIST, and the U.S. government, there’s strong alignment around a core set of principles:

- Safety: Robust testing, risk mitigation, and failure handling

- Fairness: Avoidance of bias and equitable outcomes

- Transparency: Systems should be explainable and users informed

- Accountability: Mechanisms for oversight, monitoring, and appeal

- Privacy: Protection of individual data and consent controls

- Governance & Continuous Improvement: Frameworks evolve as AI technologies and risks do

Even resources like Harvard’s “Responsible AI” guidance emphasize that responsible practices enhance both outcomes and public trust, both of which are vital for scaling any AI-driven product.

Institutional Playbooks: What the Frameworks Say

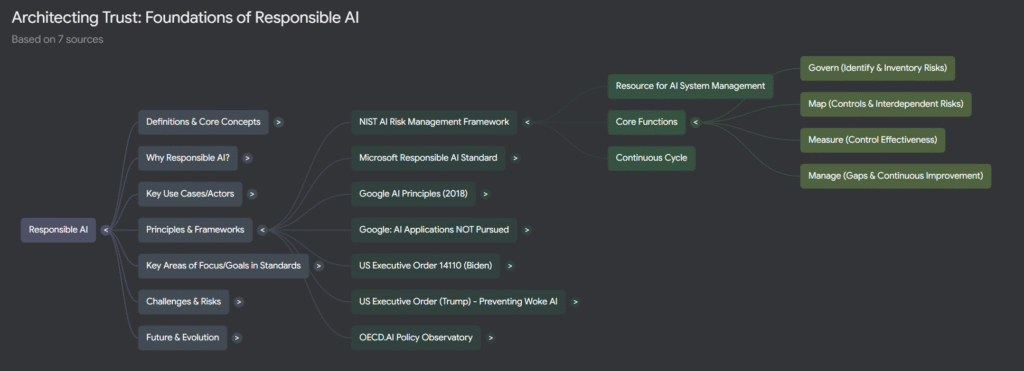

NIST’s AI Risk Management Framework

NIST lays out a structured pathway built on four core functions (Govern, Map, Measure, and Manage) to help organizations inventory risk, assess potential harm, monitor outputs, and iterate responsibly. This lifecycle approach treats Responsible AI not as a checkbox, but as a competitive and sustainable practice.

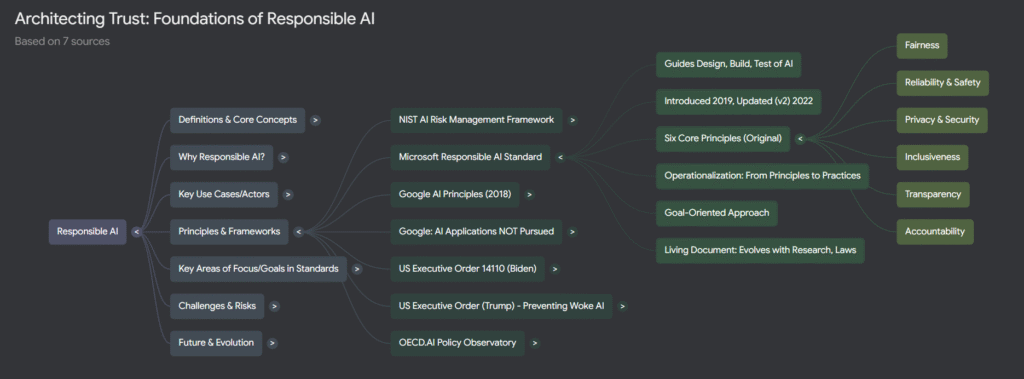

Microsoft’s Responsible AI Standard

Microsoft operationalizes its six principles (fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability) with measurements and oversight baked into the product development lifecycle, from policy to deployment and incident response.

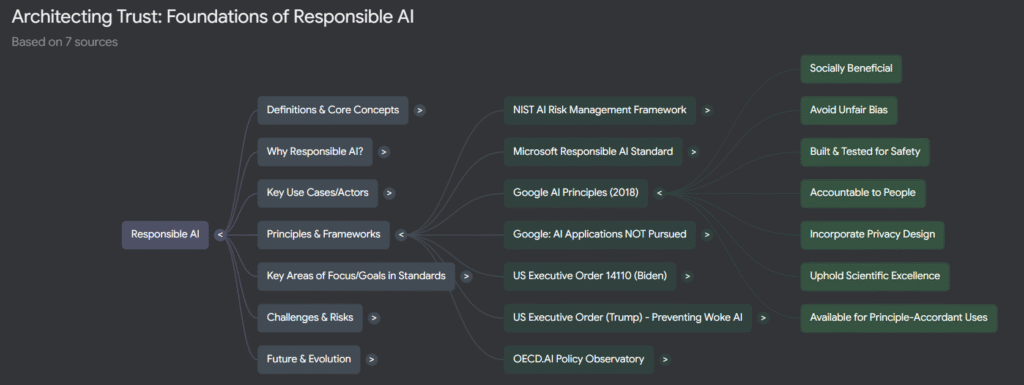

Google’s Principles

Google insists that “responsible AI equals successful AI,” committing to social benefit, safety, privacy, and scientific rigor while explicitly steering clear of surveillance, autonomous weapons, or any misuse of its technology.

U.S. Executive Orders

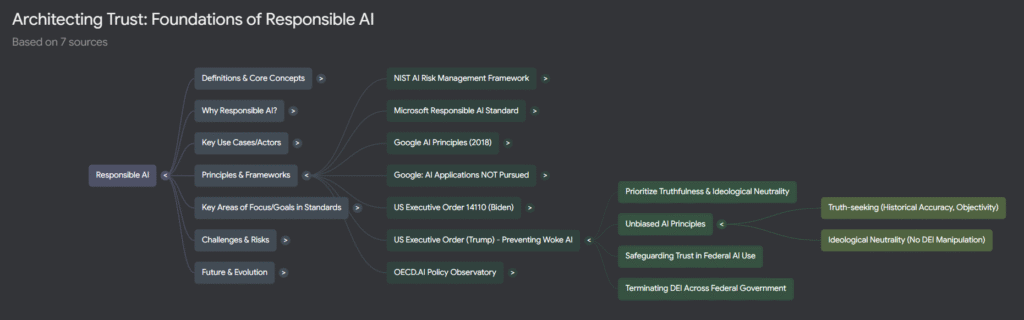

The executive orders from the Biden and Trump administrations outline divergent but overlapping priorities. Biden’s order emphasizes worker protections, equity, and international collaboration, while Trump’s 2025 Executive Order focuses on truthfulness, ideological neutrality, and eliminating DEI layers, especially in federal AI use.

These Executive Orders differ sharply in how they treat the people AI affects. Biden’s order demanded that AI developers build civil equality and fairness of outcomes into their solutions. It also held that the technology should not threaten public stability, but should instead enhance existing systems.

Contrast that with Trump’s Anti-Woke Executive Order, which demanded that AI not be driven by Diversity, Equity, and Inclusion (DEI) initiatives within the teams designing and building it. Under this order, AI should not threaten human jobs and should always strive to do good for society, but immutable characteristics of an end user should not determine how AI solutions operate, provided this does not interfere with other superseding principles.

Why Mainstream Adoption Demands Conformity

Web3 AI platforms often highlight openness, decentralization, and tokenized governance as differentiators. But that alone will not cut it in a world where AI adoption is tightly regulated by institutions, enterprises, and public policy.

I have previously made my point clear: mainstream exposure requires alignment. Without some compromise with accepted safety, privacy, and transparency frameworks, Web3 AI risks remaining siloed within niche circles, cut off from enterprise contracts, public trust, or regulatory approval.

This goes beyond mere certification. Gaining access to developer funds, data partnerships, hosting on regulated infrastructure, or inclusion in enterprise stacks requires trust and accountability, qualities that become visible only through alignment with the frameworks.

This is not to say that Web3 AI should give up on the core Web3 principles of privacy and decentralization. Not at all. We will explore the Web3 side of things in the next installment of this series about Responsible AI.
